Tuesday, 23 October 2012

Benefits of standardised APIs

Interoperability among platforms has improved greatly with Service-oriented Architecture (SOA), but Application Programming Interfaces (APIs) for Cloud computing are still mainly proprietary and vary between providers. This is done to keep users on the same platform, as they cannot easily migrate their software from one provider to another.

However, this form of "digital communism" is no longer acceptable, so a standardised API model should be adopted sooner or later. This will benefit end users: if they can spread their data among multiple cloud providers, they won't lose it if one of those providers suffers a failure.
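To make the data-spreading argument concrete, here is a minimal Java sketch. It assumes a hypothetical provider-neutral interface, `CloudStorage`, and does not imply that such a standard API exists today. `InMemoryProvider` stands in for a real provider. `ReplicatedStorage` writes each object to every provider and reads from the first one that answers, so a single provider outage does not lose the data.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical provider-neutral API that every provider is assumed to implement.
interface CloudStorage {
    void put(String key, byte[] data);
    Optional<byte[]> get(String key);
}

// In-memory stand-in for one provider; a real one would call that provider's service.
class InMemoryProvider implements CloudStorage {
    private final Map<String, byte[]> objects = new HashMap<>();
    private boolean failed = false;

    void fail() { failed = true; } // simulate an outage

    public void put(String key, byte[] data) {
        if (failed) throw new IllegalStateException("provider unavailable");
        objects.put(key, data.clone());
    }

    public Optional<byte[]> get(String key) {
        if (failed) throw new IllegalStateException("provider unavailable");
        byte[] stored = objects.get(key);
        return stored == null ? Optional.empty() : Optional.of(stored.clone());
    }
}

// Writes each object to every provider; reads from the first provider that answers.
class ReplicatedStorage implements CloudStorage {
    private final List<CloudStorage> providers;

    ReplicatedStorage(List<CloudStorage> providers) { this.providers = providers; }

    public void put(String key, byte[] data) {
        for (CloudStorage provider : providers) provider.put(key, data);
    }

    public Optional<byte[]> get(String key) {
        for (CloudStorage provider : providers) {
            try {
                Optional<byte[]> found = provider.get(key);
                if (found.isPresent()) return found;
            } catch (IllegalStateException outage) {
                // This provider is down: try the next replica.
            }
        }
        return Optional.empty();
    }
}
```

A real client would also need to handle a provider failing during a write, for example by recording which replicas succeeded. The sketch simply lets that exception propagate.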

Furthermore, standardised APIs will enable a public cloud to take over excess load from a private cloud or a data centre, because they will "speak the same language".
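The "same language" point can be sketched as cloud bursting: a scheduler prefers the private cloud and overflows to the public one when the private cloud is full. `ComputeCloud`, `FixedCapacityCloud` and `BurstingScheduler` are hypothetical names, and capacity is modelled crudely as a job count. The point is only that the scheduler needs no provider-specific code when both clouds implement the same interface.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical common API exposed by both the private and the public cloud.
interface ComputeCloud {
    boolean hasCapacity();
    void submit(String job);
}

// Illustrative cloud with a fixed number of job slots.
class FixedCapacityCloud implements ComputeCloud {
    final String name;
    final List<String> jobs = new ArrayList<>();
    private final int capacity;

    FixedCapacityCloud(String name, int capacity) {
        this.name = name;
        this.capacity = capacity;
    }

    public boolean hasCapacity() { return jobs.size() < capacity; }

    public void submit(String job) {
        if (!hasCapacity()) throw new IllegalStateException(name + " is full");
        jobs.add(job);
    }
}

// Cloud bursting: use the private cloud first, overflow to the public cloud.
class BurstingScheduler {
    private final ComputeCloud privateCloud;
    private final ComputeCloud publicCloud;

    BurstingScheduler(ComputeCloud privateCloud, ComputeCloud publicCloud) {
        this.privateCloud = privateCloud;
        this.publicCloud = publicCloud;
    }

    void submit(String job) {
        if (privateCloud.hasCapacity()) privateCloud.submit(job);
        else publicCloud.submit(job);
    }
}
```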

Security-related issues in Cloud Computing

The rapid growth in the number of cloud computing providers and services over the past few years has brought a series of security issues that need to be addressed if this form of computing is to keep growing at the same pace.

Anyone who has decided to let a cloud computing provider host their critical data and/or applications, with all the benefits that brings, needs to be aware of the security threats that lie within.

Simply put, security issues can be divided into two categories according to their origin: inside or outside the cloud.

Part of external security is an up-to-date firewall system that blocks external threats from hackers and monitors the credentials of anyone accessing the cloud. Firewalls are a must these days, and all reputable cloud providers keep an eye on their incoming traffic and invest heavily in perimeter security, so user data is generally well protected from this point of view.
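A real firewall does far more than this. Its simplest building block, a rule that only admits traffic from approved address ranges, can still be sketched in a few lines of Java. `Ipv4AllowList` is a hypothetical class, the ranges used below are examples, and credential checks are not modelled.

```java
import java.util.List;

// Hypothetical packet-filter rule set: only source addresses inside one of the
// listed IPv4 CIDR blocks are allowed; everything else is denied by default.
class Ipv4AllowList {
    private final List<String> cidrs;

    Ipv4AllowList(List<String> cidrs) { this.cidrs = cidrs; }

    // Convert dotted-quad notation to an unsigned 32-bit value stored in a long.
    static long toLong(String ip) {
        String[] parts = ip.split("\\.");
        if (parts.length != 4) throw new IllegalArgumentException("bad address: " + ip);
        long value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) throw new IllegalArgumentException("bad octet: " + part);
            value = (value << 8) | octet;
        }
        return value;
    }

    // True if ip lies inside network/prefix, e.g. "10.0.0.0/8".
    static boolean inRange(String ip, String cidr) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long mask = prefix == 0 ? 0 : (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        return (toLong(ip) & mask) == (toLong(parts[0]) & mask);
    }

    boolean allows(String sourceIp) {
        for (String cidr : cidrs) {
            if (inRange(sourceIp, cidr)) return true;
        }
        return false; // default deny
    }
}
```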

Another part of external security is the physical security of the cloud hardware. Access to storage areas should be tightly monitored, equally at every location where data is backed up.

Internal security, however, is a more delicate issue. Unlike in large data centres, internal security within a cloud is a shared responsibility between all the parties involved: the provider, the users and intermediate third-party vendors.

For instance, application-level security is the client's responsibility, platform-level security is shared between the user and the operator, and the cloud provider is responsible for physical and external security.

Basically, the deeper the level of control a party has within the cloud, the more critical its security measures become.

Because the cloud is a shared environment, users need to be protected from one another. The main mechanism for this is virtualisation. Although it is the best technique for separating users, not all resources can be virtualised, so isolation issues may still occur.
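Hypervisor-level virtualisation cannot be shown in a few lines. The principle it enforces can be sketched at the application level instead: every resource belongs to exactly one tenant, and a tenant can only address its own resources. `TenantScopedStore` is a hypothetical class. In practice the tenant ID would come from authentication rather than from the caller.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical multi-tenant store: data is partitioned by tenant ID, and callers
// only ever receive a view bound to their own partition.
class TenantScopedStore {
    private final Map<String, Map<String, String>> byTenant = new HashMap<>();

    // A handle bound to one tenant's partition; it cannot reach other partitions.
    final class TenantView {
        private final Map<String, String> data;

        private TenantView(Map<String, String> data) { this.data = data; }

        void put(String key, String value) { data.put(key, value); }

        Optional<String> get(String key) { return Optional.ofNullable(data.get(key)); }
    }

    TenantView forTenant(String tenantId) {
        return new TenantView(byTenant.computeIfAbsent(tenantId, id -> new HashMap<>()));
    }
}
```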

Saturday, 13 October 2012

MVC Approach

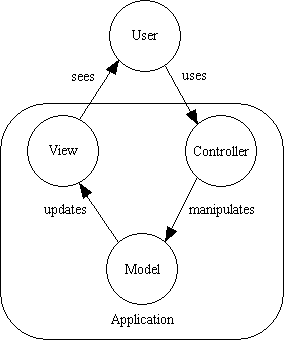

The MVC (Model-View-Controller) approach is an architectural design pattern that separates an application's business logic from its GUI (Graphical User Interface). It was first used in the Smalltalk programming language. As its name suggests, it consists of three main components.

Model

This is the core of the application. Usually it is the data the application works on (often a database) together with its state. For example, in a banking application this would be the customer database, the customers' accounts and transactions, and the banking operations they are eligible for.

View

This part of the application is responsible for how data is visualised. It selectively highlights specific attributes of the Model and suppresses others, so it can be regarded as a presentation filter. It also keeps track of the client's platform and makes sure the appropriate GUI is used for optimum performance.

Controller

The Controller could be regarded as the "brain" of the application. It interprets the client's input, decides how the Model needs to change as a result of this input, and selects the View to be used accordingly.
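The three roles can be illustrated with a minimal, framework-free Java sketch of the banking example. The class names (`BankModel`, `BankView`, `BankController`) and the withdrawal rule are illustrative assumptions and do not come from any framework.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Model: account data plus the operations the bank allows on it.
class BankModel {
    private final Map<String, Long> balances = new HashMap<>(); // balances in cents

    void open(String account, long cents) { balances.put(account, cents); }

    long balance(String account) {
        Long b = balances.get(account);
        if (b == null) throw new IllegalArgumentException("no such account: " + account);
        return b;
    }

    void withdraw(String account, long cents) {
        long current = balance(account);
        if (cents <= 0 || cents > current) throw new IllegalArgumentException("withdrawal refused");
        balances.put(account, current - cents);
    }
}

// View: turns model data into output text and contains no business rules.
class BankView {
    String showBalance(String account, long cents) {
        return String.format(Locale.ROOT, "Account %s: %d.%02d", account, cents / 100, cents % 100);
    }

    String showError(String message) { return "Error: " + message; }
}

// Controller: interprets the input, updates the Model, chooses what the View shows.
class BankController {
    private final BankModel model;
    private final BankView view;

    BankController(BankModel model, BankView view) {
        this.model = model;
        this.view = view;
    }

    String handleWithdraw(String account, long cents) {
        try {
            model.withdraw(account, cents);
            return view.showBalance(account, model.balance(account));
        } catch (IllegalArgumentException e) {
            return view.showError(e.getMessage());
        }
    }
}
```

Because the View only formats and the Model only enforces rules, either can be swapped (for example a web page instead of text) without touching the other.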

In web-based information systems, this approach can be applied with Java EE using the thin-client method, which places almost the whole MVC framework on the server. The client is only expected to send form data or requested links to the Controller, which, depending on the request, interacts with the Model to produce the required data; the response is then displayed to the user by the View. In this setup, the Model could be represented by JavaBeans or EJBs (Enterprise JavaBeans), the View could be implemented with JSF (JavaServer Faces) pages, and the Controller could be a Servlet mediating between the Model and the View.

Friday, 12 October 2012

JSP vs Servlets

Both JSP (JavaServer Pages) and Servlets are APIs (Application Programming Interfaces) whose aim is to provide a means of displaying dynamic content in a web browser. Servlets are Java objects that dynamically handle requests and create responses; the Servlet API allows a developer to add dynamic content to a web server using the Java platform. JSP, on the other hand, is a server-side Java technology that generates dynamic web pages from HTML, JavaScript, JSP directives and elements, as well as other document types, in response to a user request to a Java web server. JSP pages are translated into servlets and compiled before they are run. Therefore, servlets can do anything that JSP can do.

Coding in JSP is relatively easier than in servlets, as JSP has a simple HTML-like syntax, while servlets use Java syntax for data processing and produce their HTML output through what can be hundreds of println statements, which can be quite a bother at times, to say the least. In simple words, servlets can be regarded as HTML in Java, and JSP as Java in HTML! It is also worth mentioning that servlets can respond faster to the first request, because they are already compiled and running, while a JSP page must first be translated into a servlet and compiled before it can generate the HTML output (after that, it runs as a compiled servlet too).

Since both servlets and JSP are Java technologies, one can easily be translated into the other. Nowadays developers often use both in conjunction to combine their benefits: JSP's ease of coding for presentation and servlets' strength in request processing.
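The "HTML in Java" style can be seen in a small sketch of servlet-style output. To keep it runnable without a servlet container, a `StringWriter` stands in for the writer a real servlet would obtain from `response.getWriter()`. `ServletStylePage` is a hypothetical name.

```java
import java.io.PrintWriter;
import java.io.StringWriter;
import java.util.List;

// Servlet-style page generation: every line of HTML is printed from Java code.
// A StringWriter replaces the servlet response writer so this runs standalone.
class ServletStylePage {
    static String render(String title, List<String> items) {
        StringWriter buffer = new StringWriter();
        PrintWriter out = new PrintWriter(buffer);
        out.println("<html><body>");
        out.println("<h1>" + escape(title) + "</h1>");
        out.println("<ul>");
        for (String item : items) {
            out.println("<li>" + escape(item) + "</li>");
        }
        out.println("</ul>");
        out.println("</body></html>");
        out.flush();
        return buffer.toString();
    }

    // Minimal escaping of text content so user data cannot inject markup.
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }
}
```

In a JSP page the same output would be mostly plain HTML, with Java expressions embedded in tags such as `<%= ... %>`.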

How do Servlets handle concurrent HTTP requests?

Servlets support multi-threading by default: whenever an HTTP client request comes in, the servlet container hands it to a separate thread (typically taken from a thread pool), and that thread calls the same servlet instance. This is how a servlet handles concurrent requests.
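The thread-per-request model can be simulated with a plain `ExecutorService`. One object plays the servlet and is created once, and a pool of worker threads calls it concurrently. `HitCounterServlet` and `ContainerSimulation` are hypothetical stand-ins that do not use the real servlet API. The sketch also shows the main consequence of this design: any field of the servlet is shared by all request threads, so it must be thread-safe (here, an `AtomicInteger`).

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a servlet (no javax/jakarta servlet API is used):
// a single instance whose service() method is called from many threads at once.
class HitCounterServlet {
    // Instance fields are shared by every request thread, so they must be thread-safe.
    private final AtomicInteger hits = new AtomicInteger();

    String service(String path) {
        int n = hits.incrementAndGet();
        return "request #" + n + " for " + path + " on " + Thread.currentThread().getName();
    }

    int hits() { return hits.get(); }
}

// Hypothetical "container": hands each request to a worker thread,
// but every thread calls the same servlet instance.
class ContainerSimulation {
    static HitCounterServlet run(int workerThreads, int requests) {
        HitCounterServlet servlet = new HitCounterServlet(); // created once
        ExecutorService workers = Executors.newFixedThreadPool(workerThreads);
        for (int i = 0; i < requests; i++) {
            String path = "/page/" + i;
            workers.submit(() -> servlet.service(path));
        }
        workers.shutdown();
        try {
            if (!workers.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("requests did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return servlet;
    }
}
```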

However, if the number of requests at a given moment exceeds the maximum number of threads allowed, the server running the servlet can queue the extra requests, deny them, or start a new instance of the same servlet to handle the extra load; it depends on the specific settings.
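The limit on concurrent requests can be sketched with a bounded `ThreadPoolExecutor`. This shows only the "queue, then deny" behaviour. The pool size, queue length and the class name `BoundedRequestPool` are illustrative assumptions, not the defaults of any particular server.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical request pool: at most maxThreads requests run at once, up to
// queueSize wait, and anything beyond that is rejected.
class BoundedRequestPool {
    // Returns {accepted, rejected} for `incoming` simultaneous long-running requests.
    static int[] simulate(int maxThreads, int queueSize, int incoming) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                maxThreads, maxThreads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueSize),
                new ThreadPoolExecutor.AbortPolicy()); // throw when threads and queue are full
        CountDownLatch release = new CountDownLatch(1); // keeps the requests "busy"
        int accepted = 0;
        int rejected = 0;
        for (int i = 0; i < incoming; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                accepted++;
            } catch (RejectedExecutionException e) {
                rejected++; // a web server might answer with HTTP 503 here
            }
        }
        release.countDown(); // let the running and queued requests finish
        pool.shutdown();
        return new int[] {accepted, rejected};
    }
}
```

With two threads and a two-slot queue, six simultaneous requests give four accepted (two running, two waiting) and two rejected.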

Thursday, 11 October 2012

Servlets vs CGI

Choosing servlets over CGI has many advantages; the main ones are:

1. Platform independence. As servlets are written in Java, they can run on any servlet-enabled web server, whatever the platform: Windows, Unix, Linux, etc. For instance, an online application developed on a Windows machine with a Java web server can easily be run on an Apache Tomcat server on another platform without any changes to the code.

2. Performance. Servlets run much faster than CGI scripts. Once a servlet has been initialised during the first user request, it remains in the server's memory as a single instance, and each new request is simply handled by a new thread. Traditional CGI scripts, by contrast, start a new process every time a request is received.

3. Extensibility. As servlets are written in Java, a well-designed, robust and object-oriented language, they can easily be extended (for example, through inheritance) into new components, making them well suited to a fast-paced business environment.

4. Security. Servlets inherit Java's well-known safety features, such as exception handling and automatic memory management. A servlet is executed by a servlet container within a restricted environment, called a sandbox, which reduces the risk posed by harmful code.

However, CGI does have advantages of its own that have to be taken into consideration. CGI scripts can be written in many languages, although in practice they are mainly written in Perl. Each script also runs in its own operating-system process, so scripts can't cause concurrency conflicts with one another.

Wednesday, 10 October 2012

Service-Oriented Architecture

What is SOA, or Service-Oriented Architecture?

In simple words, it is a style of structuring applications so that they consist of discrete, reusable software modules, each with a simple and distinct interface for data input and output. These modules communicate by sending messages to each other over a network in a standard "language".

SOA has become a key architecture for IT business organisations, as it provides a flexible working model that can respond to rapid changes in a fast-paced business environment.

Differences between a service and a component.

A component is usually implemented in a specific technology, so only clients built on that same technology can communicate and interoperate with it, although they can do so at very high speeds of processing and communication. However, components are not suitable for mass deployment.

A service, on the other hand, is accessed over the network in a standardised way, which allows it to communicate freely with any other available service.

SOA has many advantages, but below are the most significant ones:

1. Mobility (transparency). An organisation can move services to other machines or even other providers without affecting its clients' use of them. Through dynamic binding to a service, clients don't even need to know where the service is physically located.

2. Higher availability. Because of location transparency, multiple instances of a service can run simultaneously on more than one server. If one machine goes down for any reason, a dispatcher redirects user requests to another instance of the service without the client even noticing.

3. Scalability. Also due to location transparency, if a client needs more resources or processing power for a specific task over a period of time, a load-balancer could forward requests to multiple service instances simultaneously to get the job done.

4. Interoperability. Since services use standard communication protocols, end clients can communicate with a service directly, without intermediate translation layers.

5. Modularity. The modular architecture of SOA-based applications allows a specific service to be improved, if needed, without affecting the application as a whole.

6. Reusability. Reusability is one of the core principles of SOA. It means reusing existing assets rather than architecting a new application from scratch. If a specific module has proved successful, it can be reused in future products, which results in lower costs and a faster time to market.
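The mobility, availability and scalability points can be sketched together with a toy service registry in Java. Clients look a service up by name only. Requests are spread round-robin across instances, and instances marked as down are skipped. The registry, the round-robin policy and the `up` flag are hypothetical simplifications. A real SOA deployment would use network calls, health checks and a proper registry or load balancer, and this sketch is not thread-safe.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// One running copy of a service; the handler stands in for a network call to `host`.
class ServiceInstance {
    final String host;
    final Function<String, String> handler;
    boolean up = true; // hypothetical health flag

    ServiceInstance(String host, Function<String, String> handler) {
        this.host = host;
        this.handler = handler;
    }
}

// Hypothetical registry: binding by name (location transparency), round-robin
// distribution (scalability) and skipping failed instances (availability).
class ServiceRegistry {
    private final Map<String, List<ServiceInstance>> services = new HashMap<>();
    private final Map<String, Integer> nextIndex = new HashMap<>();

    void register(String name, ServiceInstance instance) {
        services.computeIfAbsent(name, n -> new ArrayList<>()).add(instance);
    }

    String call(String name, String request) {
        List<ServiceInstance> instances = services.getOrDefault(name, new ArrayList<>());
        int start = nextIndex.getOrDefault(name, 0);
        for (int i = 0; i < instances.size(); i++) {
            int index = (start + i) % instances.size();
            ServiceInstance candidate = instances.get(index);
            if (candidate.up) {
                nextIndex.put(name, (index + 1) % instances.size()); // round-robin
                return candidate.handler.apply(request);
            }
        }
        throw new IllegalStateException("no available instance of " + name);
    }
}
```

Moving a service to another host only means registering a new instance under the same name; clients that call `registry.call("quote", ...)` do not change.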

Tuesday, 2 October 2012

Process vs Thread

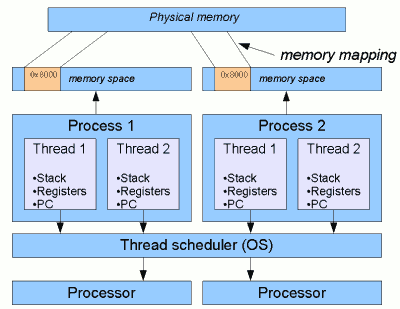

Put simply, a process may contain many threads.

In most multitasking operating systems, processes request their own memory space from the machine they are running on, while threads don't. Instead, threads use only the memory allocated to their parent process.

For instance, a Java Virtual Machine runs as a single process on the operating system. Threads executed on that virtual machine share the resources belonging to it. However, even though they share the same resources, each thread has its own stack space. This is how one thread's execution of a method is kept separate from another's.
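The split between shared process memory and per-thread stacks can be demonstrated inside one JVM. Several threads update the same heap object, while each keeps its own local counter on its own stack. `SharedHeapDemo` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Threads of one JVM process share heap objects, but each thread has its own stack.
class SharedHeapDemo {
    static final class Result {
        final int shared;           // value of the single heap object all threads updated
        final int[] perThreadLocal; // each thread's own stack-local count

        Result(int shared, int[] perThreadLocal) {
            this.shared = shared;
            this.perThreadLocal = perThreadLocal;
        }
    }

    static Result run(int threadCount, int incrementsPerThread) {
        AtomicInteger sharedCounter = new AtomicInteger(); // one object on the shared heap
        int[] localResults = new int[threadCount];
        List<Thread> threads = new ArrayList<>();
        for (int t = 0; t < threadCount; t++) {
            final int id = t;
            Thread thread = new Thread(() -> {
                int local = 0; // a local variable on this thread's own stack
                for (int i = 0; i < incrementsPerThread; i++) {
                    local++;
                    sharedCounter.incrementAndGet();
                }
                localResults[id] = local;
            });
            threads.add(thread);
            thread.start();
        }
        for (Thread thread : threads) {
            try {
                thread.join(); // join() also makes each thread's writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new Result(sharedCounter.get(), localResults);
    }
}
```

With four threads doing 1,000 increments each, the shared counter reaches 4,000, while every thread's local counter stops at 1,000.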

Distributed systems

Nowadays we no longer consider computers just as standalone machines that help us work faster and increase our productivity. Instead, computers are getting more and more interconnected, sharing their resources and computing power.

Just as a single computer consists of many dedicated hardware devices, each making a separate contribution to the whole system (for example, a processor, fast random-access memory, storage, a graphics card, data input devices and so on), a distributed system employs a similar architecture, but on a more global scale. It is a collection of autonomous computers, connected through a network, that coordinate their activities and share their resources in such a way that end users perceive the system as a single facility. A distributed system could span a whole building, or even a city, a country or a continent. Introducing distributed systems in an enterprise increases scalability, performance, fault tolerance and ease of repair, as data is not stored entirely on one unit but shared among many. Thus, the failure of a single node does not critically affect overall performance.

There are, however, some key differences between single and distributed systems. Apart from the physical separation of the different computers, there is no global clock. The explanation for this is actually pretty simple: each computer in the system has its own clock, which runs at its own pace, very close to the others but still slightly different. Therefore, there cannot be a single global time for the whole system, and only local clocks can be taken into account.
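The disagreement between clocks can be quantified with a simple drift model. Each node's clock runs at (1 + ρ) times real time, with ρ given in parts per million (ppm). The model and the drift values are assumptions for illustration; real clocks also drift unevenly over time.

```java
// Simple drift model: a node's clock runs at (1 + drift) times real time,
// with drift given in parts per million (ppm). Values are illustrative only.
class DriftingClock {
    final double driftPpm;

    DriftingClock(double driftPpm) { this.driftPpm = driftPpm; }

    // Seconds this node's clock shows as elapsed after `realSeconds` of real time.
    double localSecondsAfter(double realSeconds) {
        return realSeconds * (1 + driftPpm / 1_000_000.0);
    }

    // Disagreement between two clocks that were synchronised at time zero.
    static double skewSeconds(DriftingClock a, DriftingClock b, double realSeconds) {
        return Math.abs(a.localSecondsAfter(realSeconds) - b.localSecondsAfter(realSeconds));
    }
}
```

Two clocks drifting at +10 and -10 ppm that start in sync are about 1.73 seconds apart after one day (86,400 s × 20 ppm = 1.728 s), which is why no single node's clock can serve as global time.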

The other distinctive feature of distributed systems is network delay, or network latency. Because the physical distance between nodes could be miles or more, and network traffic may be switched and routed through many intermediate devices, latency is inevitable and must be taken into account.
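A rough estimate shows why latency cannot be ignored even over fast links. The sketch assumes a signal speed in optical fibre of about 2 × 10^8 m/s (roughly two thirds of the speed of light in a vacuum). It also assumes a fixed, hypothetical delay per router hop.

```java
// Rough latency estimate: propagation time through fibre plus a fixed cost per
// router hop. Both constants are assumptions for illustration.
class LatencyEstimate {
    static final double FIBRE_KM_PER_MS = 200.0; // about 2 x 10^8 m/s

    static double oneWayMs(double distanceKm, int hops, double perHopMs) {
        return distanceKm / FIBRE_KM_PER_MS + hops * perHopMs;
    }

    static double roundTripMs(double distanceKm, int hops, double perHopMs) {
        return 2 * oneWayMs(distanceKm, hops, perHopMs);
    }
}
```

Take nodes 1,000 km apart with 10 hops at 0.5 ms each. This gives 5 ms of propagation plus 5 ms of hop delay: about 10 ms one way and 20 ms for a round trip.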

Depending on a distributed system's design and settings, different computers could work concurrently (or simultaneously) towards the completion of a single task. This is not a set rule and can vary from system to system.
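Splitting one task among several workers can be sketched in Java by summing an array in chunks and combining the partial results. Threads in a single JVM stand in for separate machines here, so network transfer and node failures are not modelled. `SplitTask` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// One task (summing an array) split into chunks that workers process at the same
// time. Worker threads stand in for separate machines in a distributed system.
class SplitTask {
    static long parallelSum(long[] data, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> partials = new ArrayList<>();
            int chunk = (data.length + workers - 1) / workers; // ceiling division
            for (int start = 0; start < data.length; start += chunk) {
                final int from = start;
                final int to = Math.min(start + chunk, data.length);
                partials.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) sum += data[i];
                    return sum; // this worker's partial result
                }));
            }
            long total = 0;
            for (Future<Long> partial : partials) total += partial.get(); // combine results
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("a worker failed", e);
        } finally {
            pool.shutdown();
        }
    }
}
```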
